Now that Digital Lethargia and the Diglet RSS Reader have been live for a while, I figured it was time to break up the content that gets loaded into pages or something similar. This helps keep the scroll length and the browser's memory usage down. I also noticed that the performance of fetching unread RSS articles was starting to suffer, and retrieving fewer rows at a time could help with that, although that problem was better solved by creating some extra indices in the database, as you will read below.

Let's start with pagination, which turns out to be a lot more complicated than you would think. It is especially hard to do on data that comes in from multiple sources and is mixed together into one view. I spent a long time trying to get some traditional methods to work, as well as some add-ons to GORM and GoLang in general, but got nowhere with it. Another method would be the dynamic loading that a lot of social media sites use, where new content is automatically loaded as you scroll towards the bottom of the page. This is a behaviour that I really do not like, as it gets in the way of reaching links at the bottom of the page (where a lot of RSS feed links are!) and I just don't like the idea of constantly chasing the end. The method that ended up working for me is a hybrid of this dynamic model and Cursor Pagination. So let's start by defining the two common methods of paginating results from a database:

Limit/Offset Pagination

This is the most common and basic method of pagination. It uses the formula "Give me a list of items starting at the row number equal to the page number multiplied by the number of items per page". As you serve out the data, your "Limit" is the number of items per page, and your "Offset" is the Limit multiplied by the page number (counting pages from zero). With 10 items per page, the eleventh page gives you a query like the following:

SELECT * FROM items ORDER BY date DESC LIMIT 10 OFFSET 100

This works great on smaller data sets that are fairly static and easily ordered. But it does not scale very well: once the offsets start getting large, the queries have to step over all the rows that you are skipping. Also, if items are inserted or deleted while someone is paging through the data, the row positions shift, so items can easily be missed or shown twice.
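As a minimal sketch of the formula above in Go, the offset can be computed from a zero-based page number and handed to the query as parameters. The `offsetQuery` helper and the `items` table are placeholders for illustration, not code from Diglet, and the clauses are written in the `LIMIT ... OFFSET ...` order that MySQL expects.

```go
package main

import "fmt"

// offsetQuery builds a Limit/Offset query for a zero-based page number.
// The helper name, table and column are hypothetical placeholders.
func offsetQuery(page, pageSize int) (string, []any) {
	if page < 0 {
		page = 0 // treat anything before the first page as the first page
	}
	offset := page * pageSize // rows the database has to step over
	return "SELECT * FROM items ORDER BY date DESC LIMIT ? OFFSET ?", []any{pageSize, offset}
}

func main() {
	// Page 10 (the eleventh page) with 10 items per page skips 100 rows.
	query, args := offsetQuery(10, 10)
	fmt.Println(query, args)
}
```

Note that the offset grows with every page, which is exactly where the scaling problem comes from.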

Cursor Pagination

The concepts of Cursor Pagination are similar to Limit/Offset, except that instead of just dealing with the starting row and number of rows, you are keeping track of an identifier for the last item returned. For the next page, you are querying for values greater/less than that value. From a database perspective this is much more efficient, as finding your starting point using a WHERE clause is much quicker than giving an offset of rows to skip over. Another advantage to this method is that it prevents items being missed on active/dynamic datasets. If rows are being inserted/removed while people are trying to page through them, they won't be missed because we are continuing on from the value of where we left off, instead of a static number of rows. An example of a query statement for Cursor Pagination would be:

SELECT * FROM items WHERE date < cursorValue ORDER BY date DESC LIMIT 10

While this looks very close to the Limit/Offset query, it will be much faster on larger datasets. The trade-off is that you need to keep track of the cursor value between loads. Another downside to Cursor Pagination is that it is a bit more complex, especially if you also want to be able to return to previous pages.
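A rough sketch of building such a query in Go might look like the following. The `cursorQuery` helper, the `items` table and the `date` column are assumptions made for illustration; a `nil` cursor stands for the first load, and the comparison flips when the sort order is ascending.

```go
package main

import "fmt"

// cursorQuery builds a cursor-paginated query (hypothetical helper).
// cursorValue is the date of the last item already shown; nil means
// nothing has been loaded yet, so no WHERE clause is needed.
func cursorQuery(cursorValue any, pageSize int, ascending bool) (string, []any) {
	op, dir := "<", "DESC"
	if ascending {
		op, dir = ">", "ASC"
	}
	if cursorValue == nil {
		return fmt.Sprintf("SELECT * FROM items ORDER BY date %s LIMIT ?", dir), []any{pageSize}
	}
	query := fmt.Sprintf("SELECT * FROM items WHERE date %s ? ORDER BY date %s LIMIT ?", op, dir)
	return query, []any{cursorValue, pageSize}
}

func main() {
	query, args := cursorQuery("2021-03-01 12:00:00", 10, false)
	fmt.Println(query, args)
}
```

One caveat with this sketch: if two items share exactly the same date, a strict comparison on the date alone can skip one of them. A common remedy is to add a unique column such as the id as a tie-breaker in both the WHERE and ORDER BY clauses.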

This is basically the method I ended up using. Since you can mark items as read or saved for later, and since each feed's articles are returned in the order of their publish date as they get added, the data being returned is fairly dynamic and would be fairly inaccurate if I used the Limit/Offset method. While it is possible to keep track of previous pages in Cursor Pagination, the hidden read/saved items in my dataset started to make that functionality really complex, so I decided to abandon "pages" of data for a method closer to the dynamic loading I mentioned earlier. Instead of automatically loading the next set of data when you scroll, I added a "Load More" button that stays at the bottom. When clicked, an AJAX request uses the cursor value to return the next set of data, which is dynamically added to the end of the page.
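To illustrate why the cursor copes better with read/saved items, here is a small in-memory sketch in Go. It is not the actual Diglet code: the `Article` and `Page` types, the `loadMore` function and the use of Unix seconds for the publish date are all assumptions, and a real version would run a database query instead of filtering a slice.

```go
package main

import (
	"fmt"
	"sort"
)

// Article is a hypothetical, trimmed-down article record.
type Article struct {
	ID        int
	Published int64 // Unix seconds; stands in for the publish date
	Read      bool
}

// Page is what a "Load More" request could send back to the browser.
type Page struct {
	Articles   []Article
	NextCursor int64 // publish time of the last article returned
	HasMore    bool  // whether to keep showing the "Load More" link
}

// loadMore returns up to pageSize unread articles older than cursor,
// newest first. A cursor of 0 means "start from the newest article".
func loadMore(all []Article, cursor int64, pageSize int) Page {
	unread := make([]Article, 0, len(all))
	for _, a := range all {
		if !a.Read && (cursor == 0 || a.Published < cursor) {
			unread = append(unread, a)
		}
	}
	sort.Slice(unread, func(i, j int) bool { return unread[i].Published > unread[j].Published })

	page := Page{HasMore: len(unread) > pageSize}
	if page.HasMore {
		unread = unread[:pageSize]
	}
	page.Articles = unread
	if len(unread) > 0 {
		page.NextCursor = unread[len(unread)-1].Published
	}
	return page
}

func main() {
	articles := []Article{
		{ID: 1, Published: 500}, {ID: 2, Published: 400}, {ID: 3, Published: 300},
		{ID: 4, Published: 200}, {ID: 5, Published: 100},
	}
	first := loadMore(articles, 0, 2) // articles 1 and 2
	fmt.Println(first.Articles, first.NextCursor, first.HasMore)

	// The reader marks article 1 as read before clicking "Load More".
	articles[0].Read = true
	second := loadMore(articles, first.NextCursor, 2) // articles 3 and 4
	fmt.Println(second.Articles, second.NextCursor, second.HasMore)
}
```

With Limit/Offset, the second load would have skipped two rows of the now shorter unread list (articles 2 and 3) and started at article 4, so article 3 would never have been shown. Continuing from the cursor value avoids that.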

How to Use

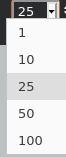

By default, the page size is set to 25 items. You can change this using the dropdown at the top right.

If there are more items than that count, a "Load More" link will appear below the last article. Simply click on that link to retrieve the next set of articles.

The sorting icon and behavior have changed a little. Click the icon at the top right to toggle the date sorting between descending (default) and ascending order.

Indices

Splitting up the data into pages did help with the rendering time and memory usage in the browser, but did not help much with the loading times overall. Since I am not very experienced on the database design side, I figured there was a great deal that could be improved there. I started by doing some research into creating indices in MySQL to see if that would help. An index in MySQL is basically a separately maintained B-tree (in most cases) of the column values you choose, kept in sorted order to allow for faster lookups. Indices can slow down writes to a table, since each index also has to be updated whenever rows are inserted, updated or deleted, but they can greatly increase the speed of lookups. There was currently no issue with write speeds in the system, so I continued.

When creating the list of articles to display for a user on the front page, the system gets all the articles for each feed that they follow, and omits any of them marked as read or saved by the user through a JOIN to a separate table. I assumed that this was the piece that was slowing things down the most, so I created a multi-column index on the articles table based on the feed id, published status and publish date. I also created one on the table that keeps track of items read/saved, based on the user id and the article id. As soon as the indices were populated, the front page loaded more than 5x faster. Success!
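For reference, multi-column indices like these can be created with plain `CREATE INDEX` statements. The Go sketch below only builds the SQL text; the index, table and column names (`articles`, `user_articles`, `feed_id` and so on) are guesses for illustration and not the real Diglet schema.

```go
package main

import (
	"fmt"
	"strings"
)

// createIndexSQL builds a MySQL CREATE INDEX statement for a
// multi-column index (hypothetical helper; all names are placeholders).
func createIndexSQL(name, table string, columns ...string) string {
	return fmt.Sprintf("CREATE INDEX %s ON %s (%s)", name, table, strings.Join(columns, ", "))
}

func main() {
	// Articles looked up by feed, then published status, then publish date.
	fmt.Println(createIndexSQL("idx_articles_feed_status_date",
		"articles", "feed_id", "published", "publish_date"))

	// Read/saved markers looked up by user, then article.
	fmt.Println(createIndexSQL("idx_user_articles_user_article",
		"user_articles", "user_id", "article_id"))
}
```

The column order matters: MySQL can use a multi-column index for lookups on its leftmost columns, so the column that every query filters on (here the feed id or the user id) goes first.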

Changes committed since last Dev Diary

- You can now collapse the sidebar using its icon

- Added social links (YouTube, Twitch, and Twitter) to menu

- Pagination implemented (See above for instructions)

- Fix for overflow on long article titles.

- Added BBS and Help pages

- Added support tickets for logged in users

- Fixed security bug with public groups

- Log cleanup job.

References

- Limit/Offset and Cursor Pagination: https://dev.to/jackmarchant/offset-and-cursor-pagination-explained-b89

- Cursor Pagination: https://medium.com/swlh/how-to-implement-cursor-pagination-like-a-pro-513140b65f32

- Indices: https://www.tutorialspoint.com/mysql/mysql-indexes.htm